AI Defeats Captcha Better Than Humans

In the clearest sign that the AI apocalypse is upon us, AI is now significantly better at solving captchas than humans. Captchas are those annoying things you must do when registering for many websites, such as choosing all the bicycles in a set of pictures or sliding a puzzle piece into place.

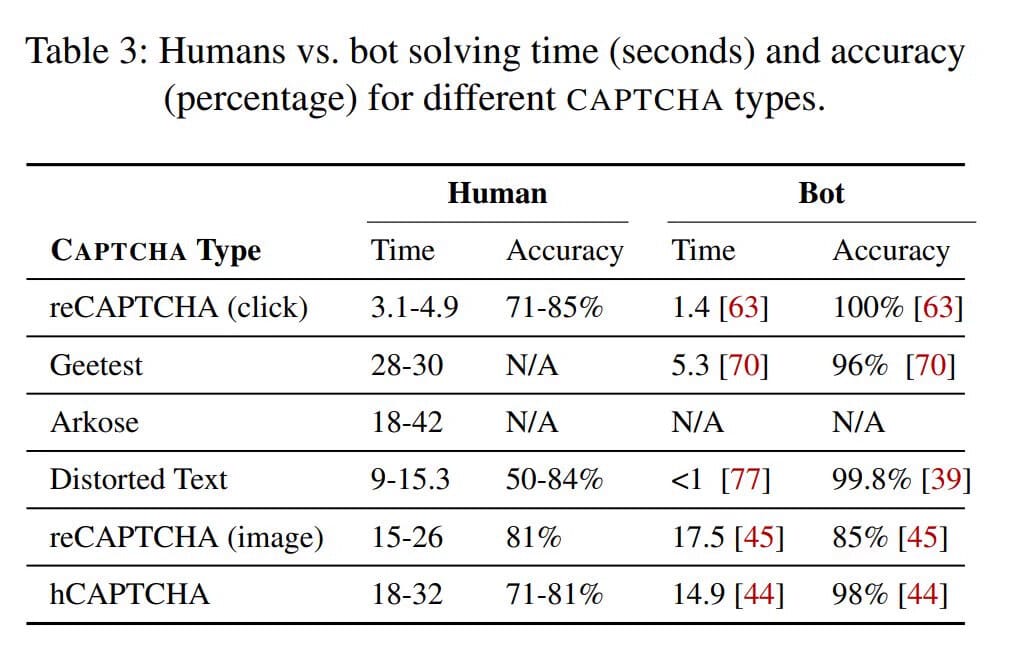

Not only do captchas not work against modern AI, but humans are significantly slower and less accurate.

The left side of the chart above shows the accuracy and solving time of humans against different types of captchas. The right side shows the same for bots, which perform significantly better.

Elon Musk, the owner of X.com, formerly Twitter, deals with many bots on his platform, and according to him, the only way to prevent bots at scale is by “subscription,” which means taking a credit card. The only way to stop a spammer is to make it economically infeasible to spam.

LK-99 Superconductor Update

In one of the most disappointing but probably wholly predictable stories, LK-99, the much-hyped potentially superconductive material, was all but disproven this week.

If you missed it, which would be surprising since this story was everywhere, a team out of South Korea published a paper claiming to have created a material that was superconductive at room temperature. This would have been the holy grail of energy transfer. But a story from nature.com has more or less settled the debate.

The first red flag was in the original video, which shows what the article calls “half-baked levitation.” In the video, the edge of the sample seemed to stick to the magnet, and it seemed delicately balanced. In contrast, superconductors that levitate over magnets can be spun and even held upside-down. Another signal of superconductivity is a massive drop in resistivity. But it turns out the temperature needed to achieve that drop is the same temperature at which the material they used undergoes a phase transition, which produces the same drop in resistivity.

All that aside, even though I’m disappointed, watching the entire world rally behind this science with nothing but positive feelings toward it was incredible. Hopefully, this will prompt more people to take an interest in materials science.

Unitree Humanoid Robot

Next, a new human-like robot was released by a company called Unitree. I had never heard of Unitree, but its lineup looks incredibly similar to Boston Dynamics’ robots, at a much lower price point.

This new humanoid robot stands out because it can be purchased for $90k. The dog-like Spot robot from Boston Dynamics is $75k, compared to Unitree’s version, which is only $1,600. Unitree claims it was able to get its prices so low because it developed its own lidar in-house.

OpenAI Acquires Minecraft Clone Creator

Just this week, OpenAI acquired a company called Global Illumination, a maker of an open-source game builder that looks like Minecraft. The NY-based company was founded just two years ago, and the acquisition announcement said they will be working on core products at OpenAI. I can think of 100 reasons OpenAI would want game-building technology, but this seems more like an acquihire than anything else. What do you think? Is OpenAI getting into video games? The worlds of video games and AI are crashing together right now, so I wouldn’t be surprised.

OpenAI Burning Cash

Speaking of OpenAI and spending money, there were multiple reports this week that OpenAI’s financial situation is weak. It was reported that they are burning cash quickly, spending $700k a day to keep ChatGPT running, not including other products like GPT-4 and DALL-E, and that the only reason they are staying afloat is the $10b investment from Microsoft.
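
To put those reported figures in perspective, here is a rough back-of-the-envelope sketch. It uses only the two numbers from the reports ($700k a day and the $10b investment) and makes a big simplifying assumption: that ChatGPT's running cost is the only expense and that there is no revenue at all. The function names are mine, purely for illustration.

```python
# Reported figures from the story; everything else here is a simplification.
DAILY_COST_USD = 700_000          # reported daily cost of running ChatGPT
INVESTMENT_USD = 10_000_000_000   # reported Microsoft investment


def annual_cost(daily_cost: float, days_per_year: int = 365) -> float:
    """Yearly cost if the daily cost stayed constant."""
    return daily_cost * days_per_year


def runway_years(cash: float, daily_cost: float, days_per_year: int = 365) -> float:
    """Years a pile of cash would last at a constant daily cost.

    Assumes no revenue and no other expenses, which is not realistic.
    """
    return cash / annual_cost(daily_cost, days_per_year)


if __name__ == "__main__":
    print(f"Annual ChatGPT cost: ${annual_cost(DAILY_COST_USD):,.0f}")
    print(f"Runway on the investment alone: "
          f"{runway_years(INVESTMENT_USD, DAILY_COST_USD):.1f} years")
```

Under those assumptions, the reported daily cost comes to roughly $255.5 million a year, and the reported investment alone would cover about 39 years of it. Real costs are certainly higher once salaries, training runs, and other products are included, so treat this as a sense of scale rather than a forecast.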

Although many view this as their apparent fall toward bankruptcy, I don’t think there’s any way that will happen. Not only do they have an incredibly popular product whose monetization they are improving every day, but Microsoft will not let its investment die. Sam Altman and the rest of the OpenAI team are too smart to glide into bankruptcy. Plus, the fact that they are making acquisitions signals that they are bullish on their financial position.

AI Doctors

Microsoft says GPT-4 is good enough for medical tasks. An article from The Decoder states, “As an example, Microsoft cites the faster development of cancer drugs, where many clinical trials would have to be abandoned due to insufficient recruitment. Billions of dollars would be wasted in lengthy processes.” And even though GPT-4 was not explicitly trained on medical data, it could structure complex clinical studies according to specified criteria. They also believe GPT-4 could achieve expert-level performance on medical question-answer datasets.

And not to be outdone, the open-source community released DoctorGPT, a model trained explicitly on medical datasets. DoctorGPT is a large language model that can pass the US Medical Licensing Exam and is based on the Llama 2 7B model. Think about that for a moment: an AI that can fit on most devices, works without the internet, and can pass the medical licensing exam. The potential of this technology is enormous. In theory, all of this should increase the quality and decrease the cost of medical treatments. For all the joking about AI taking over the world, these types of breakthroughs lead me to believe AI will have a substantial positive impact on the planet.

StableCode

Next, Stability AI, the company behind Stable Diffusion, has released a new open-source large language model called StableCode, specifically trained to be an AI coding assistant. StableCode was developed to be directly integrated into developer tools like Visual Studio Code. It’s a tiny model, coming in at just 3B parameters. It also has a context window of up to 16k tokens, making it even easier to write large segments of code. I’m a huge fan of all these coding assistants and fine-tuned models for coding because anything that makes developers more productive and efficient is a good thing in my mind.

OpenAI Content Moderation

This week, OpenAI released content moderation tools based on their cutting-edge large language models. This is a massive win for websites with user-generated content because OpenAI’s content moderation models are the best. Not only that, they’re super easy to implement.
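
To give a feel for why this kind of moderation can be simple to wire up, here is a minimal sketch of the general pattern: hand a model a written policy plus the user's content, and read back a label. This is not OpenAI's actual tooling. The policy text, the labels, and the `ask_model` callable are all hypothetical, and the `fake_model` stand-in exists only so the example runs without any network access. In a real setup, `ask_model` would wrap whatever chat model you use.

```python
from typing import Callable, Dict, List

Messages = List[Dict[str, str]]

# Hypothetical policy; a real site would write its own.
POLICY = (
    "Label the user content with exactly one word:\n"
    "ALLOW if it does not violate the policy,\n"
    "FLAG if it contains harassment, threats, or spam."
)

VALID_LABELS = {"ALLOW", "FLAG"}


def build_messages(content: str, policy: str = POLICY) -> Messages:
    """Put the policy in a system message and the content in a user message."""
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": content},
    ]


def moderate(content: str, ask_model: Callable[[Messages], str]) -> str:
    """Return ALLOW or FLAG; unknown replies fall back to FLAG for human review."""
    reply = ask_model(build_messages(content)).strip().upper()
    return reply if reply in VALID_LABELS else "FLAG"


def fake_model(messages: Messages) -> str:
    """Offline stand-in for a real model call (assumption, for demo only)."""
    text = messages[-1]["content"].lower()
    return "flag" if "buy now" in text else "allow"


if __name__ == "__main__":
    for comment in ["Great video, thanks!", "BUY NOW cheap followers"]:
        print(comment, "->", moderate(comment, fake_model))
```

The design choice worth noting is the fallback: if the model replies with anything other than a known label, the content is flagged for review instead of being silently allowed.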

DoD AI

Next, the Department of Defense has established a generative AI task force. According to a press release from the DoD, this task force is “an initiative that reflects the DoD's commitment to harnessing the power of artificial intelligence in a responsible and strategic manner.” This task force will also integrate AI tools across the DoD. And, of course, whenever you’re talking about defense, there’s the opportunity to use AI for defensive and offensive purposes. I’m sure this group will be responsible for all potential uses of AI within the DoD.

Azure ChatGPT

This week, Microsoft announced Microsoft Azure ChatGPT, allowing enterprises to run ChatGPT within their network. Likely feeling the pressure from open-source large language models and the ability to run those models entirely securely and privately, Microsoft promises that Azure ChatGPT will be private, controlled, and easy to deploy. If a company is already using Azure Cloud, deploying Azure ChatGPT within the security definitions that organization has already approved and put in place will be straightforward. This is a smart move by MSFT and OpenAI to start to quell concerns about sending private company data to ChatGPT.

Gen2 18-Second Video

Runway, the company behind the incredible text-to-video product Gen2, has increased the length of their clips to 18 seconds. Now you can create an amazing AI video for a full 18 seconds, whereas we were recently limited to 2 seconds. It’s impressive to see the progress made by the Runway team.

AI Music & Major Labels

Major music labels are not making the same mistakes they did last time a new technology threatened their legacy business model. An article from The Guardian reported that Google and Universal Music are working on licensing voices for AI-generated songs. Early-stage talks are expected to include a potential tool fans could use to make AI-generated songs.

I’m happy to see music labels proactively adopting new technology rather than trying to sue it into oblivion. And, of course, artists would need to opt in to have their voices used with this technology to protect the artist’s work.

This follows a story from earlier this year: a song featuring AI-generated vocals of Drake and the Weeknd, posted by a TikTok user, was pulled from streaming services by Universal Music Group for “infringing content with generative AI.”

I believe AI-generated music will be an essential genre and will open up creativity for anyone to take advantage of. The outcome will likely be a large volume of low-quality songs, but the incredible few might make the whole technology worthwhile.

Autonomous Agents

This week, two new open-source autonomous AI agent projects were released. The first comes out of Stanford and builds on the paper that started it all, Generative Agents: Interactive Simulacra of Human Behavior. This paper showed that simulated autonomous AI agents exhibit human-like behavior, including planning and forming habits, personalities, and relationships.

And now, the authors have released the code that powered their system. I made a tutorial video on how to get their code up and running, so definitely check it out. Then, inspired by the work from this paper, a16z, the premier venture capital firm, released its own version of an AI simulation. I also plan on making a video about this. AI simulations have incredible potential in many areas, especially video games. Imagine your favorite video games, from GTA to Skyrim, having NPCs that live entire lives, even when you’re not interacting with them. This truly can change how video games work forever.

Google AI

Next are two stories about Google AI. It looks like AI features are coming directly to Google Search. These range from seeing definitions of words just by hovering over them to summarizing websites before you actually visit them. The outcome is a much more efficient search.

However, I don’t think content owners will be happy about this, as Google Search with AI makes it much less necessary to click through to a website, which means less traffic and revenue for website owners. This is similar to YouTube implementing AI to summarize YouTube videos. AI creators and content owners seem to be heading toward a fight, so seeing how this plays out will be interesting.

Google is also looking to incorporate AI features directly into ChromeOS, bringing AI writing and editing tools into the Chrome operating system. Right now, this is a confidential product, and it is expected to ship with three main pieces of functionality: rewrite, which rewrites text; preset prompts; and insert, which allows users to have AI continue their writing in their own style.

AI Safety

Our last story is about AI safety. An article from AP News warns readers to “not expect quick fixes in ‘red-teaming’ of AI models. Security was an afterthought.” White House officials are worried that AI chatbots are progressing so quickly that AI companies aren’t taking the time to properly vet their safety. Competition in the AI market is beyond fierce, so companies are forced to cut corners and rush products to market before they are thoroughly analyzed for safety.

According to the article, the main concern is that conventional software uses well-defined code to issue explicit, step-by-step instructions. OpenAI’s ChatGPT, Google’s Bard, and other language models differ. Trained primarily by ingesting, and classifying, billions of data points in internet crawls, they are perpetual works-in-progress, an unsettling prospect given their transformative potential for humanity. As previous stories I’ve covered have shown, it is incredibly easy to poison a large language model simply by changing a fraction of a percent of its training data. Security and safety concerns are real and should be taken seriously. Leading AI companies invest tons into security and safety, but do you think it’s enough? Let me know!