Integration of OpenAI's Large Language Models set to transform the way developers access and utilize knowledge on Stack Overflow's platform

Stack Overflow and OpenAI have announced a partnership that will integrate Stack Overflow's technical knowledge with OpenAI's AI models, including ChatGPT. The collaboration aims to improve AI tools by grounding them in data from Stack Overflow's extensive developer community. The first new integrations are set for release in the first half of 2024. The partnership is intended to improve developer experiences on both platforms by providing users with trusted, vetted content.

Sponsor

Vultr is empowering the next generation of generative AI startups with access to the latest NVIDIA GPUs.

Try it yourself when you visit getvultr.com/forwardfutureai and use promo code "BERMAN300" for $300 off your first 30 days.

Meet MAI-1: Microsoft Readies New AI Model to Compete With Google, OpenAI - Microsoft is developing a new AI model named MAI-1, an effort led by former Google AI leader Mustafa Suleyman, to compete with leading models from Google and OpenAI. MAI-1, distinct from previous Inflection technologies, is projected to have 500 billion parameters, which will require significant computational power and extensive training data. The project marks a strategic diversification for Microsoft, which is pursuing both small, mobile-friendly models and large, state-of-the-art AI systems. The model could debut at Microsoft's Build developer conference, depending on progress in the coming weeks.

Sam Altman says helpful agents are poised to become AI’s killer function - OpenAI plans to transcend its existing AI applications like DALL-E, Sora, and ChatGPT with more sophisticated technology that can assist with real-world tasks beyond a chat interface. Sam Altman, OpenAI's CEO, envisions a future where advanced AI could operate in the cloud without needing new hardware, though he hints that purpose-built devices, like those from Humane, might enhance the experience. Addressing the challenge of training data scarcity, Altman remains hopeful for a solution that moves away from the necessity of ever-increasing data inputs, drawing parallels to human learning capabilities without detailing a specific path forward.

Sam Altman calls ChatGPT dumbest, hints at GPT-6 and why he is willing to spend $50 bn on AGI - OpenAI's CEO Sam Altman recently characterized GPT-4 as the "dumbest model" in terms of OpenAI's ambitions towards Artificial General Intelligence (AGI), indicating the swift advancement anticipated in AI development. During a session at the Entrepreneurial Thought Leader series at Stanford University, Altman and lecturer Ravi Belani discussed the future of AI, including the capabilities and costs associated with GPT-3. Altman refrained from revealing the actual costs but emphasized the value of providing potent tools for innovation. Looking ahead, Altman shared his expectations for GPT-5 to significantly surpass GPT-4 in intelligence, underscoring a consistent trend of rapid AI evolution. He also stated his commitment to investing substantial resources into AGI, with a willingness to spend billions to create societal value.

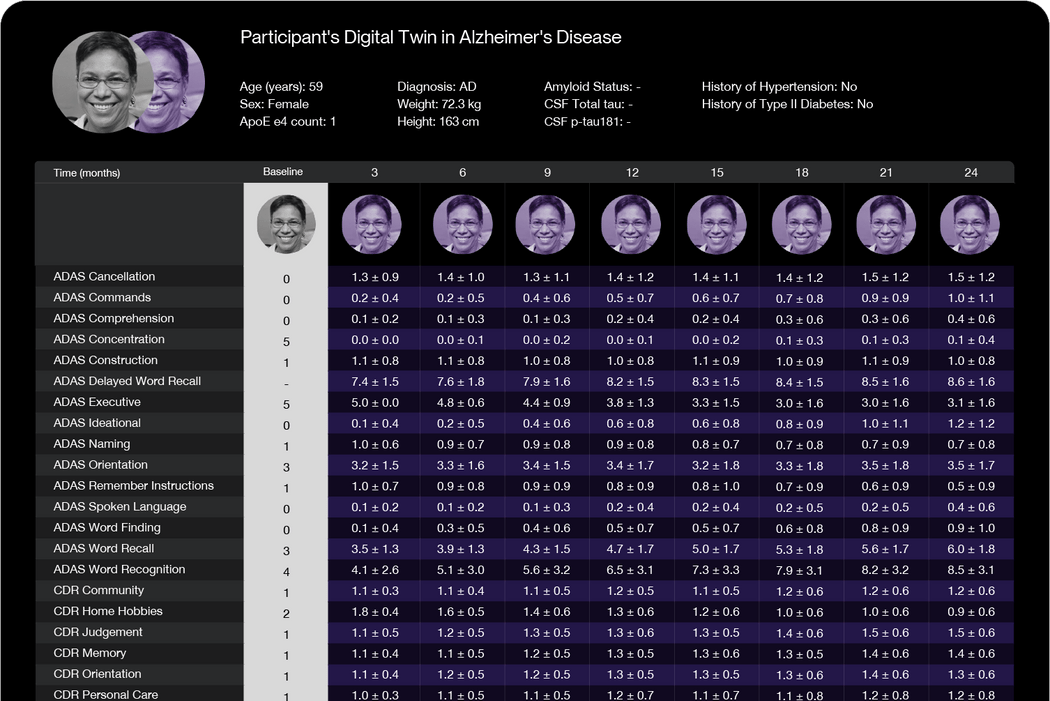

The AI-Generated Population Is Here, and They’re Ready to Work - AI-generated digital humans, or "digital twins," are being developed to perform various tasks, including work, with the potential to revolutionize the workforce. These digital humans are created using advanced AI and machine learning algorithms, allowing them to mimic human-like behavior and interactions. They can be trained to perform tasks more efficiently and accurately than humans, freeing up human workers for more creative and high-value tasks. However, the use of digital humans also raises concerns about job displacement and the need for new policies and regulations.

The first music video generated with OpenAI’s unreleased Sora model is here - OpenAI showcased an AI model named Sora capable of creating high-quality, realistic video clips, sparking excitement and concern in the creative community. This tool remains private, with selective early access granted to professionals for harm and risk assessment. Filmmaker Paul Trillo premiered a music video for Washed Out using Sora, crafted from 55 clips out of 700 possibilities. The video's seamless zoom-effect highlights Sora's text-to-video generation prowess, contrasting with other projects using additional VFX tools. Adobe plans to integrate AI models like Sora into Premiere Pro, signaling a shift towards AI-assisted video editing.

Microsoft bans US police departments from using enterprise AI tool for facial recognition - Microsoft has updated the terms of service for its Azure OpenAI Service to prohibit U.S. police departments from using the platform for facial recognition. The prohibition covers any current or future image-analysis models developed by OpenAI. The clarified policy also restricts law enforcement worldwide from using real-time facial recognition on mobile cameras in unstructured environments. The Azure OpenAI Service ban is specific to the U.S. and does not cover facial recognition with stationary cameras in controlled settings or use by police outside the U.S. The update follows Microsoft and OpenAI's recent engagements with defense-related AI projects, including work with the U.S. Department of Defense.

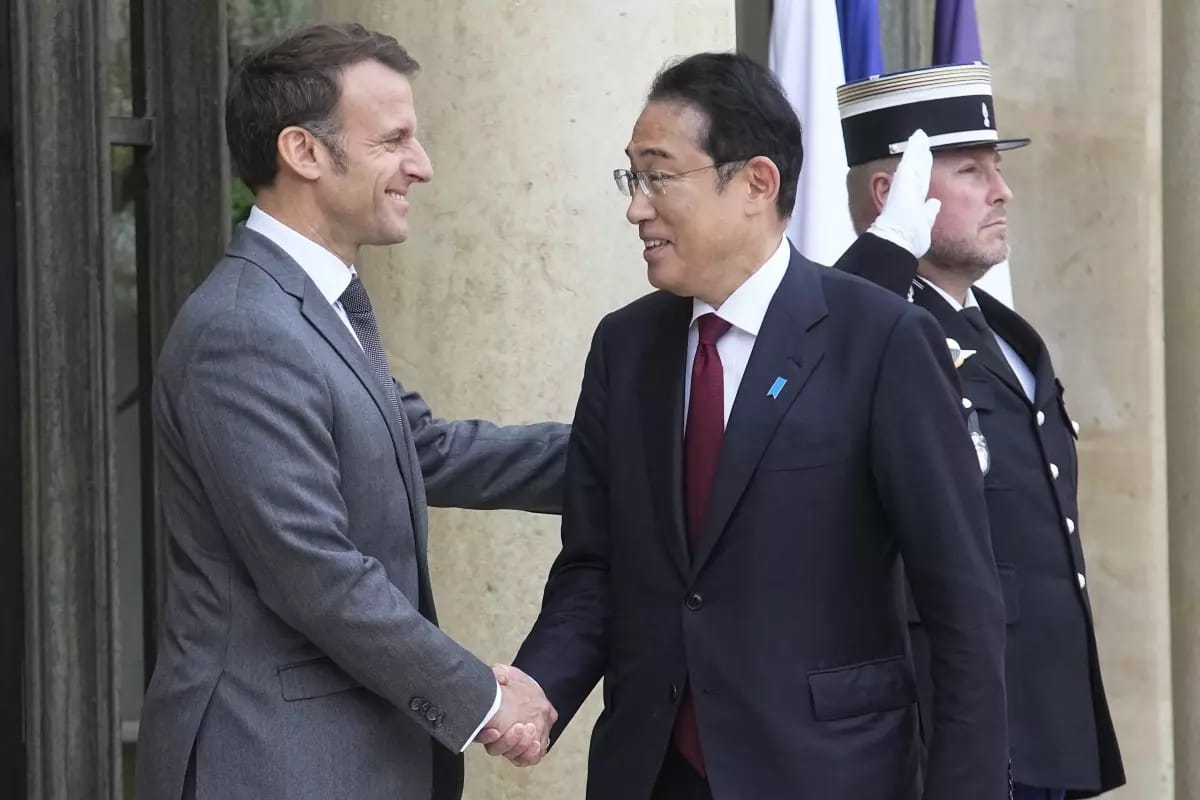

Japan's Kishida unveils a framework for global regulation of generative AI - Japanese Prime Minister Fumio Kishida, during his visit to the Paris-based Organization for Economic Cooperation and Development, introduced an international initiative for governing generative AI. With the aim of addressing potential risks such as disinformation, the framework builds on efforts from Japan's G7 chairmanship, involving the Hiroshima AI Process for establishing guiding principles and a code of conduct for AI development. The voluntary group named Hiroshima AI Process Friends has garnered the support of 49 countries and regions. Kishida emphasizes the importance of global cooperation to ensure AI's safety, security, and trustworthiness for worldwide benefit amidst international races to regulate AI technology.

How Will AI Investment Affect Big Tech Profits? It May Be Hard to Find Out - The big tech firms Microsoft, Alphabet, Meta Platforms, and Amazon have recently posted higher profit margins, primarily due to cost reductions, allowing them to invest heavily in AI development. These investments are difficult to measure in the short term since they are accounted for as capital expenditures rather than immediate expenses, delaying their impact on profit statements. Executives from these companies, including Microsoft's CFO, have warned that operating margins may decline due to increased depreciation from these investments. Despite these challenges, Microsoft has reported revenue growth from its AI products, and other companies are also seeing potential revenue increases from new AI initiatives, although the overall financial benefit remains uncertain.

Four start-ups lead China’s race to match OpenAI’s ChatGPT - In the past three months, four Chinese AI startups—Zhipu AI, Moonshot AI, MiniMax, and 01.ai—have reached valuations between $1.2 billion and $2.5 billion, reflecting a surge in China's domestic investment in generative AI technologies. These companies compete intensely for talent and resources to develop foundational models and gain a foothold in a market that lacks access to prominent U.S. AI applications like ChatGPT. Collectively, these startups raised approximately $2 billion in early 2024, with a notable emphasis on AI chatbots and avatar applications that require less computational power due to U.S. restrictions on advanced chip exports. Despite the rapid growth and innovation, the AI landscape in China remains highly competitive with no clear market leader, pushing these startups to adapt and innovate to differentiate themselves continuously.

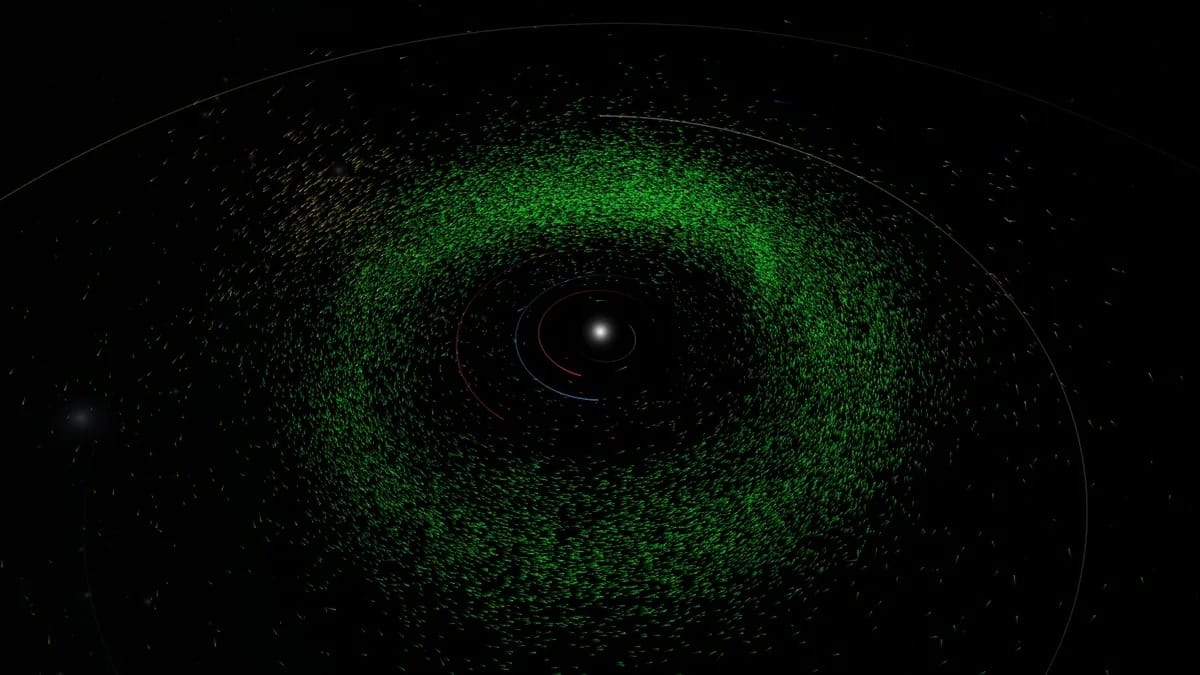

AI discovers over 27,000 overlooked asteroids in old telescope images - A new AI algorithm, THOR, has led to the discovery of over 27,000 previously overlooked asteroids in archived telescope imagery. This advancement boosts our ability to identify and track asteroids, critical for early detection of potential Earth threats. Among the newly identified space rocks, 150 have orbits intersecting with Earth's, with none posing an imminent collision risk. The B612 Foundation and Asteroid Institute scientists utilized Google Cloud to amplify THOR's capabilities, demonstrating a paradigm shift in astronomical practice. The technology promises to enhance global telescope effectiveness in asteroid detection. With the upcoming Vera C. Rubin Observatory's aid, scientists anticipate discovering around 2,000 more potentially hazardous asteroids, potentially doubling the current catalog in six months.

Awesome Research Papers

KAN: Kolmogorov-Arnold Networks - The paper introduces Kolmogorov-Arnold Networks (KANs), inspired by the Kolmogorov-Arnold representation theorem, as a potential alternative to Multi-Layer Perceptrons (MLPs). KANs differ from MLPs by placing learnable activation functions on edges instead of fixed activation functions on nodes. They have no linear weights at all: each weight is replaced by a learnable univariate function parametrized as a spline. The authors report better accuracy with smaller networks, faster neural scaling laws, and improved interpretability. KANs have also shown promise as collaborators that help scientists (re)discover mathematical and physical laws. The research suggests that KANs could be a significant step forward for deep learning models that currently depend on MLPs.
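
To make the "learnable function on every edge" idea concrete, here is a minimal sketch of a single KAN-style layer. It is a simplification under stated assumptions, not the paper's implementation: each edge function is a piecewise-linear (degree-1) spline defined by its values on a shared grid, and the names `piecewise_linear`, `kan_layer`, and `edge_values` are made up for illustration.

```python
def piecewise_linear(x, grid, values):
    """Evaluate a degree-1 spline with knots `grid` and learnable knot `values`."""
    x = min(max(x, grid[0]), grid[-1])  # clamp to the grid range
    for k in range(len(grid) - 1):
        if x <= grid[k + 1]:
            t = (x - grid[k]) / (grid[k + 1] - grid[k])
            return (1 - t) * values[k] + t * values[k + 1]
    return values[-1]


def kan_layer(inputs, grid, edge_values):
    """Each output node sums one univariate function per incoming edge.

    edge_values[j][i] holds the knot values of the function on edge i -> j.
    There is no weight matrix: the knot values are the trainable parameters.
    """
    return [
        sum(piecewise_linear(x, grid, phi) for x, phi in zip(inputs, edges))
        for edges in edge_values
    ]


# Toy, hand-set edge functions on the grid [-1, 0, 1] (not trained values).
grid = [-1.0, 0.0, 1.0]
identity = [-1.0, 0.0, 1.0]   # phi(x) = x
absolute = [1.0, 0.0, 1.0]    # phi(x) = |x|
edge_values = [[identity, absolute], [absolute, identity]]

outputs = kan_layer([0.5, -0.5], grid, edge_values)
print(outputs)  # [1.0, 0.0]
```

In training, the knot values would be updated by gradient descent; the sketch only shows the forward pass.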

LoRA Land: 310 Fine-tuned LLMs that Rival GPT-4, A Technical Report - The paper discusses Low Rank Adaptation (LoRA), a method for efficiently fine-tuning Large Language Models (LLMs) that reduces the number of trainable parameters and memory usage without sacrificing performance. A study of 310 fine-tuned models, spanning 10 base models and 31 tasks, shows that models fine-tuned with 4-bit LoRA outperformed both their base models and GPT-4 on average. The paper also explores which base models are most effective for fine-tuning and examines task complexity heuristics for predicting fine-tuning success. It also covers LoRAX, an open-source inference server that supports deploying multiple LoRA fine-tuned models on a single GPU, and showcases LoRA Land, a web application running 25 specialized LLMs on one NVIDIA A100 GPU, emphasizing the efficiency and cost-effectiveness of specialized models over general-purpose LLMs.
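
The parameter savings behind LoRA can be sketched in a few lines. The example below shows the standard low-rank update `W + (alpha / r) * B @ A` in plain Python; the layer sizes, rank, and `alpha` are illustrative assumptions, and the 4-bit quantization of the frozen weights used in the study is not shown.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, the merged weight used at inference."""
    r = len(a)            # rank = number of rows in A
    delta = matmul(b, a)  # low-rank update with the same shape as W
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


d_out, d_in, rank = 64, 64, 4  # illustrative sizes, not from the paper
rng = random.Random(0)
w = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(d_out)]  # frozen
a = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(rank)]   # trainable
b = [[0.0] * rank for _ in range(d_out)]                               # trainable, zero init

merged = lora_effective_weight(w, a, b, alpha=8)
full_params = d_out * d_in
lora_params = rank * (d_in + d_out)
print(f"trainable parameters: {lora_params} instead of {full_params}")
```

Because `B` starts at zero, the merged weight equals the frozen weight before training, so fine-tuning starts from the base model's behavior. Serving many adapters on one GPU, as LoRAX does, works because only the small `A` and `B` matrices differ per task.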

Prometheus 2: An Open Source Language Model Specialized in Evaluating Other Language Models - The paper addresses the limitations of current open-source Language Models (LMs) used to evaluate responses: their scores align poorly with human judgment, and their assessment methods are inflexible. It introduces Prometheus 2, an evaluator LM that aligns more closely with human and GPT-4 judgments and supports a broader range of assessment types, including direct assessment and pairwise ranking, with customizable evaluation criteria. The model outperforms existing open LMs in correlating with human judgments across multiple benchmarks. All resources related to Prometheus 2, including models, code, and data, are publicly available.

Better & Faster Large Language Models via Multi-token Prediction - The research introduces an efficient training methodology for large language models, proposing that predicting multiple future tokens simultaneously increases sample efficiency. This technique uses multiple output heads for n-token predictions atop a shared trunk, enhancing model performance without additional training time. The approach demonstrates notable improvements in downstream tasks, especially in code generation, with the 13B parameter models showing marked gains over traditional models in problem-solving benchmarks. Furthermore, the models employing this multi-token prediction strategy exhibit up to threefold faster inference speeds, maintaining their effectiveness over extended training periods and diverse task sizes.
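
The "multiple heads on a shared trunk" setup can be sketched as follows. This is a toy illustration under stated assumptions, not the paper's architecture: the trunk is a single tanh layer and each head is a plain linear layer, whereas the paper builds both from transformer layers. All names and sizes are hypothetical.

```python
import math
import random


def linear(x, w):
    """Apply a weight matrix (list of rows) to the vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def cross_entropy(logits, target):
    """Negative log-probability of `target` under softmax(logits)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[target]


def multi_token_loss(x, trunk, heads, future_tokens):
    """Sum the losses of n heads that all read one shared trunk output.

    Head k is trained to predict the token k + 1 positions ahead.
    """
    hidden = [math.tanh(v) for v in linear(x, trunk)]  # computed once, shared
    return sum(
        cross_entropy(linear(hidden, head), token)
        for head, token in zip(heads, future_tokens)
    )


def random_matrix(rng, rows, cols):
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
vocab, d_in, d_hidden, n_future = 10, 8, 16, 4  # illustrative sizes
trunk = random_matrix(rng, d_hidden, d_in)
heads = [random_matrix(rng, vocab, d_hidden) for _ in range(n_future)]
x = [rng.gauss(0, 1) for _ in range(d_in)]

loss = multi_token_loss(x, trunk, heads, future_tokens=[3, 1, 4, 1])
print(f"summed loss over {n_future} heads: {loss:.3f}")
```

At inference time the extra heads can be dropped, leaving ordinary next-token prediction, or used to draft several tokens at once, which is where the reported speedup comes from.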

A Careful Examination of Large Language Model Performance on Grade School Arithmetic - Grade School Math 1000 (GSM1k) was created to test the mathematical reasoning abilities of large language models (LLMs), addressing concerns that benchmark scores reflect dataset contamination rather than genuine skill. GSM1k mirrors the established GSM8k benchmark in style and complexity, with comparable human solve rates and answer complexity. Evaluating LLMs on GSM1k revealed accuracy drops of up to 13%, suggesting systematic overfitting in models such as Phi and Mistral, while frontier models like Gemini/GPT/Claude showed less overfitting. The analysis found a moderate correlation (Spearman's r^2=0.32) between a model's tendency to reproduce GSM8k examples and its performance gap between GSM8k and GSM1k, hinting that many models have partially memorized GSM8k.
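
For readers unfamiliar with the statistic, the sketch below shows how a Spearman correlation like the one quoted above is computed: rank both variables, then take the Pearson correlation of the ranks. The per-model numbers are made-up placeholders for illustration only and are not values from the paper.

```python
def ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: the Pearson correlation of the two rank lists."""
    rx, ry = ranks(xs), ranks(ys)
    n = len(xs)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical per-model values, invented purely to exercise the function.
memorization_score = [1.0, 2.0, 3.0, 4.0, 5.0]  # tendency to reproduce GSM8k
accuracy_gap = [2.0, 1.0, 4.0, 3.0, 5.0]        # GSM8k accuracy minus GSM1k accuracy

rho = spearman(memorization_score, accuracy_gap)
print(f"rho = {rho:.2f}, rho^2 = {rho ** 2:.2f}")
```

Because it works on ranks, the statistic only asks whether models that memorize more also tend to show larger gaps, without assuming the relationship is linear.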

Researchers at NVIDIA AI Introduce ‘VILA’: A Vision Language Model that can Reason Among Multiple Images, Learn in Context, and Even Understand Videos - Researchers from NVIDIA AI and MIT have introduced VILA, a visual language model that can reason among multiple images, learn in context, and even understand videos. VILA focuses on effective embedding alignment and utilizes dynamic neural network architectures, pre-trained on large-scale datasets like Coyo-700m. The model has demonstrated significant results in improving visual question-answering capabilities, achieving high accuracy on benchmarks like OKVQA and TextVQA. VILA's ability to retain prior knowledge while learning new tasks makes it a promising development for various applications.

OpenVoiceV2 - OpenVoice V2 improves on the original version with better audio quality and native support for six languages. It offers the same features as V1 and is released under the MIT License, which permits commercial use. Key features include accurate tone color cloning, flexible voice style control, and zero-shot cross-lingual voice cloning. OpenVoice V2 remains easy to use, with detailed instructions and community-contributed guides covering multiple operating systems. The setup process is consistent across versions, and examples are available to demonstrate the product's capabilities. OpenVoice is particularly adept at generating multilingual speech with customizable accents and emotions, without requiring the target language to appear in the training dataset.

Awesome New Launches

Anthropic Introduces the Claude Team plan and iOS app - Anthropic introduced a new Team plan and an iOS app for Claude to enhance productivity tools for businesses and individuals. The Team plan, at $30 per user per month, caters to workgroups that need higher usage, a suite of AI models tailored for varied business cases, and advanced admin features for user and billing management. It expands on the Pro plan offerings, including a 200K context window for processing long documents and multifaceted discussions. The new Claude iOS app syncs with web chats, offers vision capabilities for image analysis, and provides consistent access across all devices and plans. The app is free for all Claude users and aims to provide a flexible and powerful AI-driven experience on the go.

Introducing `lms` - LM Studio's companion cli tool - LM Studio has released version 0.2.22 alongside the first version of its command-line interface (CLI) tool, 'lms'. This companion tool handles model management tasks such as loading and unloading models, controlling the API server, and inspecting the raw input sent to the LLM. 'lms' ships with LM Studio and lives in the LM Studio directory. It updates alongside the main software and can also be built from source. Initial setup requires running LM Studio once and then bootstrapping 'lms' via terminal commands. The tool's commands cover managing local servers, managing models, and debugging workflows. It also features a streaming log function for detailed prompt analysis. 'lms' is MIT Licensed, developed on GitHub, and can be extended through the 'lmstudio.js' library, currently in pre-release alpha. Discussions and resources are available on the LM Studio Discord server and official website.

Amazon Q, a generative AI-powered assistant for businesses and developers, is now generally available - Amazon Web Services (AWS) has announced the general availability of Amazon Q, an advanced generative AI-powered assistant aimed at transforming software development and enhancing access to business data. It is designed to aid developers in a broad spectrum of tasks, including coding, testing, debugging, and managing AWS resources efficiently, potentially increasing productivity by over 80%. In addition, Amazon Q Business provides tools for employees to query enterprise data repositories, generate reports, and build AI-powered apps without coding experience. AWS is also offering two free, self-paced courses to help workers leverage Amazon Q and has introduced Amazon Q in QuickSight for creating BI dashboards using natural language. The initiative is part of Amazon’s commitment to offering free AI skills training to 2 million individuals by 2025.

GitHub Copilot Workspace: Welcome to the Copilot-native developer environment - GitHub has introduced Copilot Workspace, an advanced development environment that aims to transform the coding process by integrating natural language into various stages of software creation. After the successful deployment of GitHub Copilot, which enhanced productivity by up to 55%, the platform now offers a Copilot-native environment where tasks begin with AI assistance right from conceptualization within a GitHub Repository or Issue. It suggests a step-by-step plan which is fully editable, enabling developers to modify and finalize code with reduced cognitive load. This workspace can be run on any device, fostering collaboration through shareable workspaces and supporting the entire development cycle from planning to code review.

Gradient AI launches Llama-3-8B-Instruct-262k - Gradient AI builds AI models that support business operations through autonomous assistants running on a company's own data. Its latest release improves chat functionality in its custom model, which extends the context length of the base Llama-3 8B model while emphasizing efficient use of compute in training. The update uses NTK-aware interpolation and a new data-driven optimization of RoPE theta, and reportedly achieves strong performance with less training data. On the infrastructure side, the team uses the EasyContext Blockwise RingAttention library to handle long token contexts on Crusoe Energy's high-performance clusters.
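
As a rough illustration of what NTK-aware interpolation does to RoPE theta, the sketch below applies the commonly cited scaling rule `theta' = theta * scale^(d / (d - 2))`. The source does not give Gradient's exact recipe, and their data-driven optimization step is not shown. The head dimension, base theta, and context lengths are assumptions chosen to resemble a Llama-3 8B style setup.

```python
def rope_inverse_frequencies(head_dim, theta):
    """Per-pair rotation frequencies theta^(-2i/d) for i = 0 .. d/2 - 1."""
    return [theta ** (-2 * i / head_dim) for i in range(head_dim // 2)]


def ntk_aware_theta(base_theta, scale, head_dim):
    """Enlarge the RoPE base so the slowest frequency shrinks by `scale`."""
    return base_theta * scale ** (head_dim / (head_dim - 2))


# Assumed values for illustration only.
HEAD_DIM = 128
BASE_THETA = 500_000.0
SCALE = 262_144 / 8_192  # target context length / original context length

new_theta = ntk_aware_theta(BASE_THETA, SCALE, HEAD_DIM)
old_freqs = rope_inverse_frequencies(HEAD_DIM, BASE_THETA)
new_freqs = rope_inverse_frequencies(HEAD_DIM, new_theta)

print(f"theta: {BASE_THETA:.0f} -> {new_theta:.0f}")
print(f"fastest frequency ratio: {new_freqs[0] / old_freqs[0]:.3f}")
print(f"slowest frequency ratio: {new_freqs[-1] / old_freqs[-1]:.5f}")
```

The design point is that the fastest-rotating dimensions, which encode local word order, are left unchanged, while the slowest ones are stretched by the full scale factor so that distant positions remain distinguishable.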

Hugging Face launches LeRobot open source robotics code library - Hugging Face recently recruited former Tesla staff scientist Remi Cadene to lead an open source robotics project. The company has now unveiled LeRobot, a toolkit available on GitHub that aims to democratize AI robotics and foster innovation. LeRobot is a versatile framework with a library for data sharing, visualization, and training AI models. It supports a broad range of robotic hardware and integrates with virtual simulators. As part of this initiative, Hugging Face is also compiling a massive, crowdsourced robotics dataset in collaboration with various entities.

JPMorgan Unveils IndexGPT in Next Wall Street Bid to Tap AI Boom - JPMorgan Chase & Co. has introduced IndexGPT, a new range of thematic investment baskets developed using OpenAI's GPT-4 model, marking the bank's latest effort to leverage the AI boom on Wall Street. The tool automates the creation of thematic indexes by generating keywords related to a theme and scanning news articles to identify relevant companies, aiming to identify investments based on emerging trends rather than traditional metrics. Despite the recent decline in interest for thematic funds, JPMorgan's IndexGPT aims to offer a more efficient stock selection methodology for institutional clients and those looking to capitalize on emerging themes.

Anduril announces Pulsar family of AI-enabled electromagnetic warfare systems - Anduril Industries has introduced Pulsar, a state-of-the-art electromagnetic warfare system designed for modern battlefields. Pulsar leverages artificial intelligence to swiftly recognize and counter a variety of threats, specializing in drone and jamming technologies. The system features a software-defined radio and advanced computing to enable real-time responses and adaptability. It is compatible with ground and aerial platforms, offering electronic countermeasures and other advanced capabilities. The modular Pulsar system promotes rapid operational deployment and seamless integration with existing command-and-control frameworks, ensuring up-to-date defenses against both conventional and emerging threats.

PanzaMail - Panza is an email assistant that learns and replicates a user's writing style through a customized training process using past email data. It combines a fine-tuned Language Model (LM) with a Retrieval-Augmented Generation (RAG) component to generate relevant content. Panza runs entirely locally, which requires significant computational resources (a GPU with 16-24 GiB of memory); a CPU-only option is forthcoming. Setup involves exporting emails to .mbox format and running a series of scripts, and it requires some Python knowledge and Hugging Face model access. It supports efficient training methods and offers either a GUI or a CLI for using the personalized email model. Panza is developed by a team at IST Austria and provides a user-focused email writing tool without sharing data externally.

SuperpoweredAI/spRAG: RAG framework for challenging queries over dense unstructured data - spRAG is a RAG (Retrieval-Augmented Generation) framework designed for open-book question answering over unstructured data such as financial reports, legal documents, and academic papers. It reports 83% accuracy on the challenging FinanceBench benchmark, outperforming standard RAG systems by a wide margin. spRAG uses two methods to improve its performance: AutoContext and Relevant Segment Extraction (RSE).