I just published a video explaining why I believe programming as a profession and industry will be obsolete sooner than we think, all due to AI. Here’s a recap of everything I spoke about. These ideas were inspired, in part, by Matt Welsh’s talk on the same subject.

This post is sponsored by ServiceNow:

ServiceNow helps companies automate repetitive tasks to save time and increase efficiency. And they are on the cutting edge of leveraging AI to do so.

Be sure to check out ServiceNow. Click here for more information: https://bit.ly/3Rag9Uk

Three Things I Know Are True:

First, coding is by far the best skill set that I ever learned. I started my programming career back in high school and was always obsessed with video games, computers, and wanting to know how they worked. The idea that I could build my own video game blew my mind. So, I did everything I could to learn how to code myself. When I got a little bit older, right out of college, coding allowed me to take my ideas and build whatever I wanted. I didn't have to rely on anybody else, and this was really key. I was always thinking of new ideas and wanted to build something. Having to rely on somebody else to build my vision just did not work for me. I could really build as quickly as my passion drove me to do so.

That led me, after multiple failed startups, to one that was actually successful: my previous company, called Sonar. I started Sonar by myself, wrote all of the initial code, got the first customers, and then, of course, I brought on an incredible team to help me build it into what it became. But it all started with a conversation with a friend of mine who had this problem. I told them, "Hey, let me try to solve that for you using software." That led to the next 10 years of my life building out that company. But the key is, I was able to just have a conversation, and then, on the same day, I was building.

The second thing I know for sure is that coding is very hard, even after 20-plus years of doing it. It is extremely difficult for me. For instance, Python package management continues to be the bane of my existence, and it seems to be hard for most people. For those who can get over the fear of even starting to learn it, there's a huge learning curve. Coding requires you to think through every possible permutation of a problem, for, in some cases, extremely complex systems. Then, you have to write down all of these instructions in a language that is very foreign and doesn't come naturally. Of course, bugs are really hard to see when you're actually in the code.

Number three: Because of everything I mentioned, humans are just really bad at coding, or at least most are. The human brain isn't really wired to keep track of every single edge case, every single permutation of a complex system. That's why we write code in little batches and then try to put all of these different batches together.

Coding Was Even Worse Before

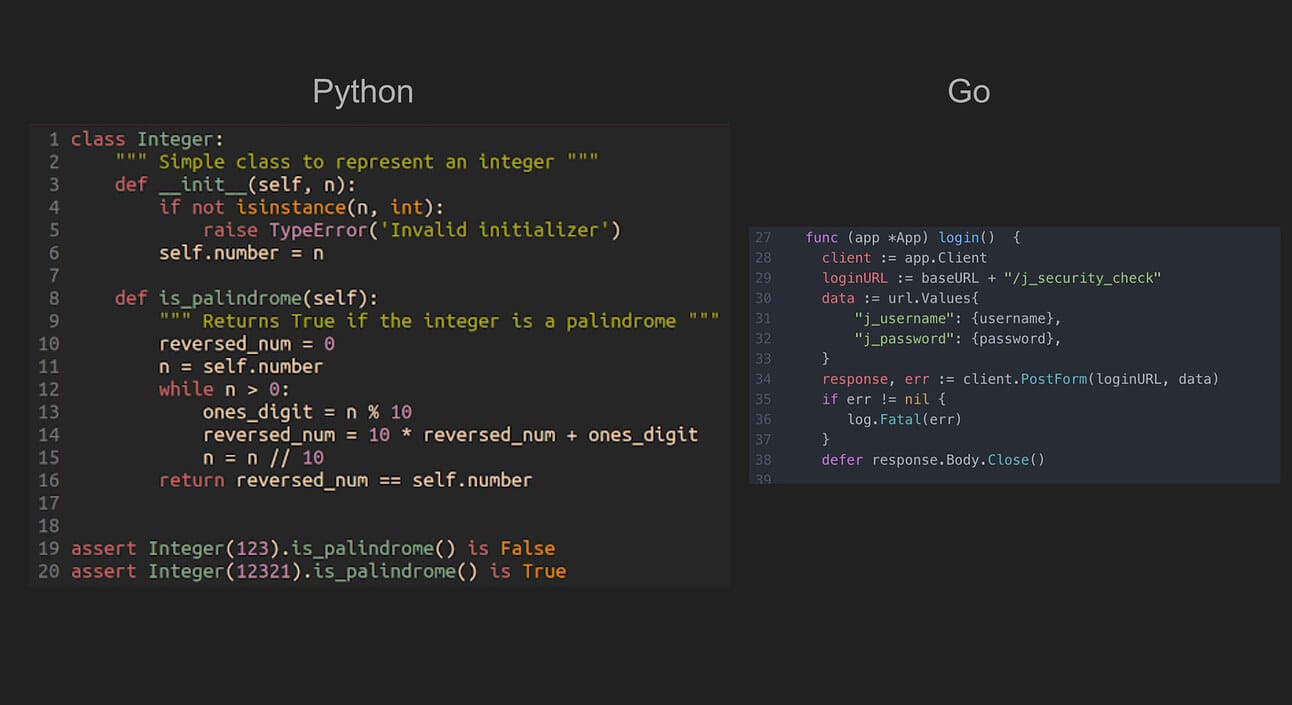

If you think coding is hard today, you have no idea how hard it was back in the day. Look at these two pictures: on the left, we have computers that basically took up entire rooms; on the right, the cards that software had to be physically punched into. We started with brittle, unforgiving syntax, manual memory management, and thinking about every single byte. But it got easier. We now have expressive languages, dynamic languages, metaprogramming, automatic memory management, and a wide range of very powerful tools to help us, because we are so bad at coding.

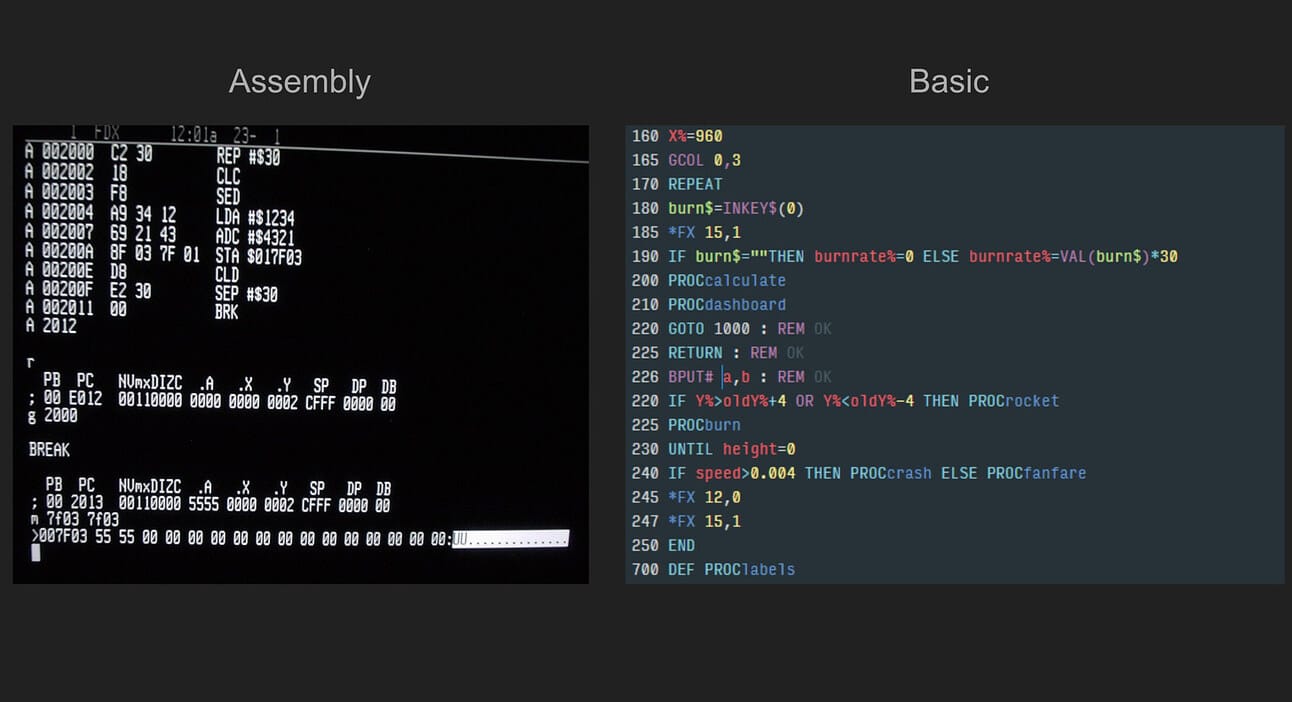

Look at what we started with. On the left, we have assembly code, and it is not friendly to humans. Then we got BASIC, and it became a little bit easier; you can start to see it looks a little bit like natural language. Then we had C, Java, and JavaScript, and things became easier still. We had more libraries and object-oriented programming, and we started to craft programming in a way that made it easier for humans to understand. Now we have more modern programming languages like Python and Go, which look pretty similar to natural language. Obviously, it's not perfect, and if you miss a character here and there, the software is still not going to work, but it is definitely a lot better than it used to be.

Coding Has Gotten Easier

We've also had enormous advancements in coding tools. We now have better IDEs, and engineers can have every customization they could possibly think of. We have linters, cloud editors so you can collaborate with other people anywhere in the world, and version control to keep track of our progress and revert changes if we make a mistake. We have automation, tests, package management (even though that still doesn't work all that well for Python), syntax highlighting, and potential bug warnings. We really do have a wide range of tools that we can use today. But programming is still super hard.

GitHub Copilot Changed Everything

Then, in 2022, GitHub Copilot changed everything. For $10 a month, you can have an AI assistant to help you write code. It was incredibly impressive right away. Just write a few words, and it will complete entire methods for you. There were enormous productivity gains from this, and better code was being written. I really cannot overstate how important GitHub Copilot is. When we look back at the history of programming evolution, GitHub Copilot is going to be an inflection point.

Copilot is not just guessing at what you're writing; it's looking at the context of the file and the context of the entire codebase. It truly is incredibly impressive. In Matt Welsh's talk, he gives a great analogy: the way GitHub Copilot changed programming pretty much overnight is like having Pong and then, a month later, Red Dead Redemption 2. That type of graphics evolution is essentially what we got in programming with GitHub Copilot.

There are a lot of reasons why GitHub Copilot delivers such a big productivity gain, and I can't imagine programming without it anymore. It allows me to stay in the zone of programming. Before Copilot came out, if I had to write some code and I wasn't sure how to write it, or didn't know how to access a piece of data in a JSON object, I would go to Stack Overflow, Google it, and try to figure it out, and it would take a lot of time. I would essentially leave my IDE, lose my train of thought, go figure it out, and then try to get back into the flow of coding. Now, I don't have to do that. I either type out what I want, and it creates it for me, or I start typing, and it fills out the rest. It greatly reduces the amount of trial and error, and you don't have to remember every bit of syntax.
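To make the JSON example concrete, here is a minimal sketch of the kind of helper I used to go searching for. The function name `get_nested`, the dotted-path convention, and the sample payload are all made up for illustration; it is one reasonable way to do it, not the only one.

```python
import json


def get_nested(data, path, default=None):
    """Walk a dotted path such as "user.orders.0.id" through dicts and lists.

    Returns `default` as soon as any step of the path is missing.
    """
    current = data
    for key in path.split("."):
        if isinstance(current, dict) and key in current:
            current = current[key]
        elif isinstance(current, list) and key.isdigit() and int(key) < len(current):
            current = current[int(key)]
        else:
            return default
    return current


# Hypothetical payload, only here to exercise the helper.
payload = json.loads('{"user": {"orders": [{"id": 42, "items": ["book", "pen"]}]}}')

print(get_nested(payload, "user.orders.0.items.1"))               # pen
print(get_nested(payload, "user.address.city", default="unknown"))  # unknown
```

This is exactly the sort of small, fiddly code where an assistant saves a trip out of the editor.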

Coding has become so much less tedious because of GitHub Copilot. So, let me show you what my coding workflow is. Granted, I'm not building production-level applications anymore. I haven't done that in a couple of years, but I am still coding every single day, testing out AI projects. So, I still do a lot of coding.

My Coding Workflow

First, I ask ChatGPT to write me some code. I am not shy about admitting that this is the first thing I do, especially with Python, because until about a year ago, I had no experience with Python. I just ask ChatGPT to write it for me. Then, I paste it into my codebase and test whether it works. After that, I weave the different pieces together with Copilot, which fills in all the gaps for me. I sit in the middle as the orchestrator. That's all I do: I'm orchestrating AI to write software for me.

AI Is Just Better At Coding

It turns out AI is actually much better at coding than humans for a lot of reasons. First, let's say you're a software company. You can hire a human with a salary, who takes breaks, who has wants and needs, who needs benefits, health insurance, and onboarding time; the list goes on. Or you can just spin up a new AI to write more code. AI scales horizontally incredibly easily.

AI coders are not only much faster at coding, but they're much less expensive too. Software engineers take years of training to become good. AI is going to be trained once, and it's going to be good no matter how much you scale that horizontally. AI is really good at the things I personally struggle with, like finding the right way to access data that's hidden deep in a JSON object or remembering the syntax for some random piece of code that I have to write.

In his talk, Matt Welsh actually does some math to figure out the difference in cost between a human programmer and an AI programmer.

AI Coding Has Limitations

Today, AI coding still has a lot of limitations. One of them is the context window. How do you take a huge codebase and allow a large language model to understand all of it in one go? You can't simply paste in the whole codebase; you're going to be limited by the model's token limit. And even if you were able to fit an entire codebase in a prompt, there's this thing called "Lost in the Middle," where large language models can recall things at the beginning and at the end of a prompt but often struggle to recall things in the middle.
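Here is a rough sketch of why a whole codebase doesn't fit. Everything in it is an assumption for illustration: the four-characters-per-token ratio is only a rule of thumb (real tokenizers vary by model and language), and the window size, reserved reply budget, and file contents are made-up numbers.

```python
CHARS_PER_TOKEN = 4  # Assumption: crude rule of thumb, not a real tokenizer.


def estimate_tokens(text):
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN


def pack_files(files, context_window, reserved_for_reply=1000):
    """Greedily include files until the estimated token budget runs out.

    `files` maps a file name to its source text. Returns the included
    names, the skipped names, and the estimated tokens used.
    """
    budget = context_window - reserved_for_reply
    used = 0
    included, skipped = [], []
    for name, source in files.items():
        cost = estimate_tokens(source)
        if used + cost <= budget:
            included.append(name)
            used += cost
        else:
            skipped.append(name)
    return included, skipped, used


# Hypothetical codebase: three files of 8,000, 20,000, and 4,000 characters.
files = {"a.py": "x" * 8000, "b.py": "y" * 20000, "c.py": "z" * 4000}
included, skipped, used = pack_files(files, context_window=5000)

print(included)  # ['a.py', 'c.py']
print(skipped)   # ['b.py']
print(used)      # 3000
```

Even in this toy case, the biggest file gets dropped, and the model never sees it.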

Right now, the biggest bottleneck for AI getting better is data. Companies don't want to share their code, and humans can only write so much code. But that's going to change, and I'll explain that in a minute. Lastly, large language models are often not up to date with the latest APIs and SDKs, and current solutions to fix that, like retrieval-augmented generation and web crawling, are pretty brittle.

But Getting Better Quickly

It is getting better, and we're going to have this snowball effect, especially with data and synthetic data. I mentioned that the current bottleneck is data, but it's also compute. However, I'm not even worried about compute; compute capacity will grow, especially with all this investment in silicon. That's why Nvidia is a massive company now. And the data will grow too. I've been talking a lot about synthetic data recently, and this is a great example.

First, as humans are able to write more code with AI tools assisting them, all of that code can go back into models to train them to be better. Not only that, as AI writes code at a much faster rate than a human ever could, all of that new code will go back into the model to make it better at coding. So, we do have this nice snowball effect as everybody becomes more productive at coding, and more code gets written. All of that code will be fed back into the AI models to make them better.

So, there are a few solutions to the current limitations. One is codebase mapping or compression: taking a massive codebase and mapping it using shorthand that large language models can understand. A project I've reviewed that does this really well is called Aider, and it uses something called Universal Ctags to describe in shorthand all of the methods and all of the code that you have in your codebase. It then feeds that very compressed, mapped version into a large language model so it can actually understand an entire codebase. This context problem is also why most AI coding projects want you to start from scratch and can't iterate on existing codebases. But this problem will be solved, whether through increased context sizes, solving the "lost in the middle" problem, or mapping and compressing codebases so that large language models can understand them easily. Additionally, we're going to be fine-tuning models specifically for coding tasks, making models that were already good at coding even better.
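To give a feel for what a codebase map looks like, here is a minimal sketch that boils a Python file down to its class and function signatures. This is not how Aider does it; I've swapped in Python's built-in `ast` module to keep the example self-contained, and the names `map_source` and `EXAMPLE` are made up for illustration.

```python
import ast


def _signature(node):
    """Render a function node as 'def name(arg1, arg2)'.

    Only plain positional arguments are listed; *args, **kwargs, and
    keyword-only arguments are left out to keep the sketch short.
    """
    args = ", ".join(arg.arg for arg in node.args.args)
    return f"def {node.name}({args})"


def map_source(source):
    """Return one shorthand line per top-level class, method, and function."""
    function_types = (ast.FunctionDef, ast.AsyncFunctionDef)
    lines = []
    for node in ast.parse(source).body:
        if isinstance(node, function_types):
            lines.append(_signature(node))
        elif isinstance(node, ast.ClassDef):
            lines.append(f"class {node.name}:")
            for item in node.body:
                if isinstance(item, function_types):
                    lines.append("    " + _signature(item))
    return lines


# Hypothetical source file, only here to show the compression.
EXAMPLE = '''
class Cart:
    def add(self, item, qty):
        self.items.append((item, qty))

    def total(self):
        return sum(price * qty for price, qty in self.items)

def checkout(cart, user):
    return cart.total()
'''

print("\n".join(map_source(EXAMPLE)))
```

The bodies are gone, but the model can still see what exists and how it is called, which is the whole point of the map.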

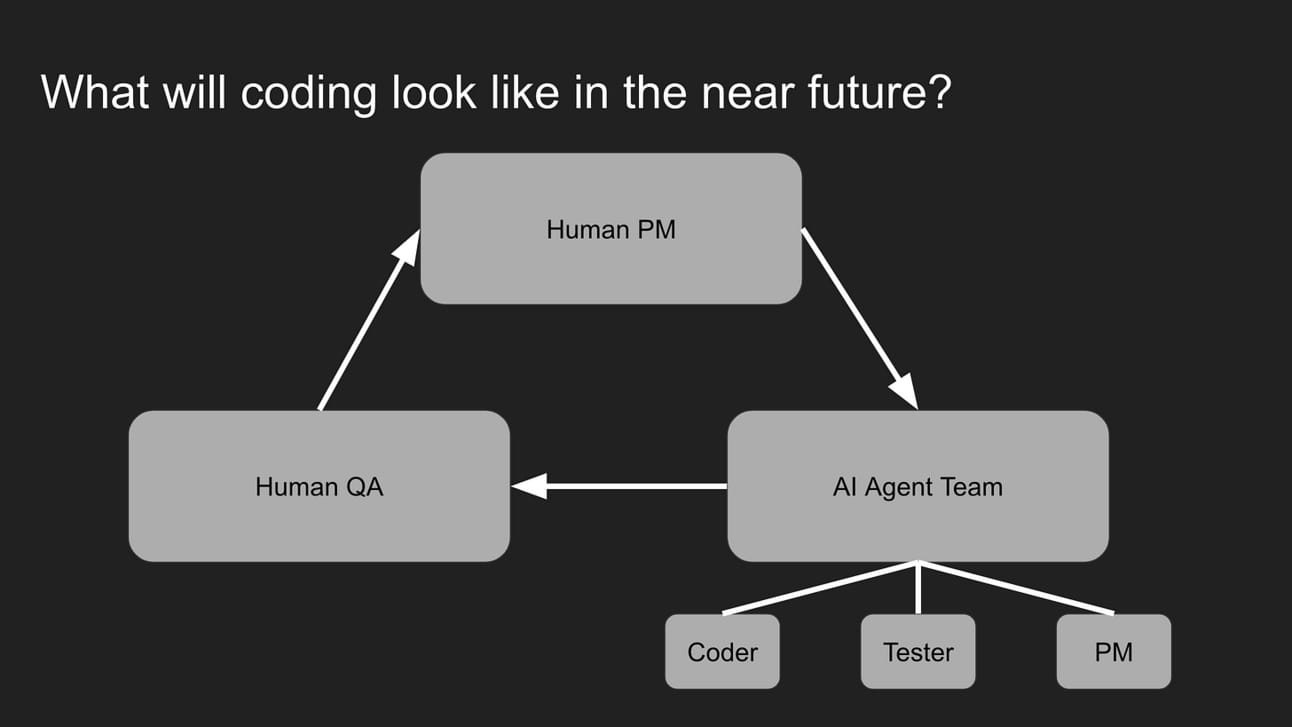

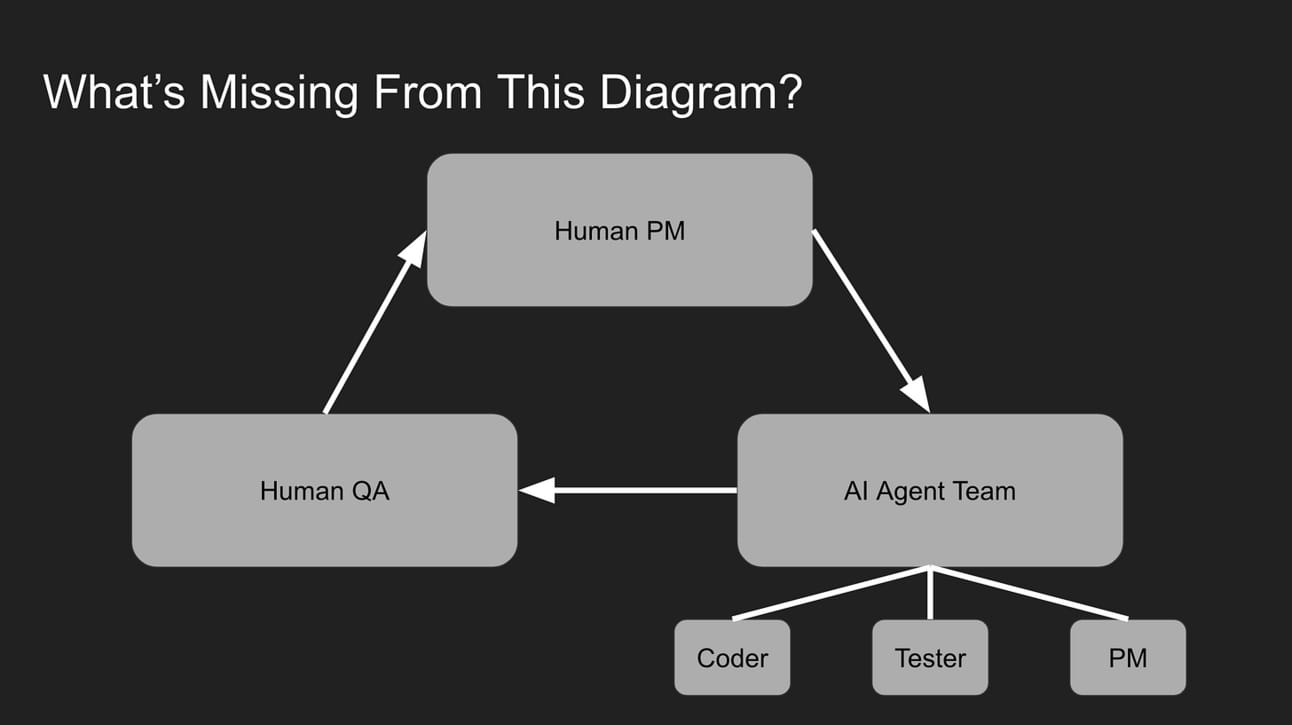

What Coding Will Look Like Soon

It’s Already Happening

And Long Term?

I see it as being direct natural language to computation, nothing in between. So, what does that mean? Let's talk about it. At the top, we have humans, and they are just going to speak in natural language, whatever language they want. That language is going to be processed and computed by the large language model. Then, we're going to have end devices, whatever device it is, whether it's lights around your house, your car, computers, phones, anything. The large language model is going to program those devices to do exactly what we want. So, the large language model is the operating system. There are no programmers anymore; humans are the programmers. And since all we have to do is say what we want, I wouldn't even consider that programming at that point. So, there are no programmers, no teams, just humans talking to large language models.

So, how do we get to this point? We need large language models to get really good at understanding what humans want and converting that into code. And they're already really good at that. Today, it's the worst it's ever going to be; it's only going to get better.

The Timelines

Thanks for reading!